A Human-AI team will usually outperform either a human or an AI working alone. As discussed in my last article, “The Future of Human-AI Teams”, humans and AIs often make different kinds of mistakes, so each can catch the other’s errors, which improves the performance of the team.

However, these teams are still not perfect. In fact, there are situations where a Human-AI team will perform worse than either agent would independently. How could that be? Shouldn’t the team perform at least as well as its weaker member? Counterintuitively, it doesn’t have to.

This picture was entirely created by a text-to-image AI with the theme “Trust in Automation”

Trust in Automation

Too Much Trust

Before we get into the weeds of how a Human-AI team can perform worse, we need to take a detour to understand how these teams behave and which factors affect the team’s dynamics. One of the most important factors that has been identified is trust in automation. By this I mean how much you trust a computer’s opinion or recommendation over your own. Some overly optimistic people believe that they should always fully trust computers, since computers should be perfect and never make mistakes. This is called the perfect automation schema. It’s a belief that automation can never fail and that it will always do what you intend it to do. More recently, this has also been called over-trust in a system.

When we over-trust a system, we assume that the automation isn’t making any mistakes at all, so we entirely miss the mistakes it is making. Worse, we can be deceived into believing that the system is still trustworthy if it is built in a way that lets users see some of its decisions, but not all. If the decisions the human does see seem reasonable, that further reinforces the belief that everything is working as intended.

Too Little Trust

Some would think that the answer to this problem is to not trust the system at all, but that would also be fundamentally flawed. Even if the AI isn’t perfect, ignoring its recommendations means you could overlook mistakes of your own that the AI was able to catch. This is often called under-trust of the system, and depending on the design of the system, it could lead to even more errors on your part than if you had decided to trust the system. A lack of trust in the system can also make a human overconfident in their own choices. When new information arrives that contradicts their original decision, they have a harder time changing course, even if the new decision would be the right one. This creates a system that can perform even worse than the human or the AI would have performed independently.

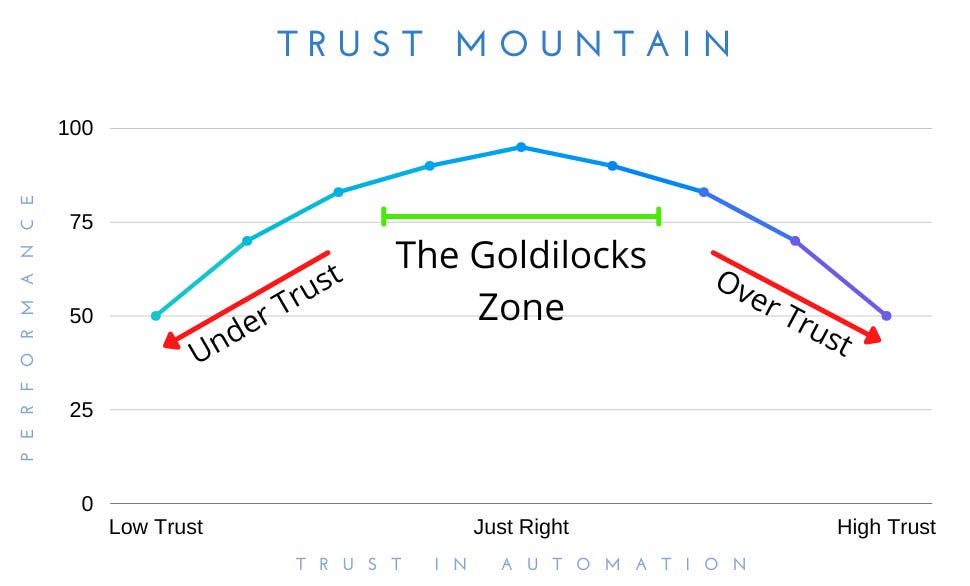

This is Trust Mountain

Trust Mountain is a graph that shows the relationship between the trust someone has in a given automation and the performance of the Human-AI team. Since both under-trust and over-trust decrease the performance of the system, there is a Goldilocks Zone in between where the user has the right amount of trust for the capabilities of the system. When you’re in this space, each agent performs in a way that complements the other, leading to fewer mistakes and better performance.
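To make the shape of Trust Mountain a little more concrete, here is a minimal toy model in Python. Everything in it is an assumption made for illustration and not taken from any study: the human is right 75% of the time, the AI’s accuracy varies from 50% to 100% across tasks, and the human defers to the AI whenever the AI’s perceived accuracy (its true accuracy plus a hypothetical `trust_bias`) is higher than their own. In this simple model the team never drops below its members; the worse-than-either case described above would need extra effects such as overconfidence, which are not modeled here.

```python
def team_accuracy(trust_bias, human_acc=0.75, n_tasks=101):
    """Expected team accuracy in a toy model (all numbers are illustrative).

    trust_bias > 0 models over-trust, trust_bias < 0 models under-trust,
    and trust_bias == 0 models perfectly calibrated trust.
    """
    total = 0.0
    for i in range(n_tasks):
        # Assumed: the AI's true accuracy ranges evenly from 0.5 to 1.0 across tasks.
        ai_acc = 0.5 + 0.5 * i / (n_tasks - 1)
        # The human judges the AI through the lens of their trust bias.
        perceived_ai_acc = ai_acc + trust_bias
        # Defer to the AI only when it *seems* more accurate than the human.
        total += ai_acc if perceived_ai_acc > human_acc else human_acc
    return total / n_tasks


for bias in (-0.5, -0.1, 0.0, 0.1, 0.5):
    print(f"trust bias {bias:+.1f}: team accuracy {team_accuracy(bias):.3f}")
```

Under these assumptions, accuracy peaks when the bias is zero and falls off on both sides, which is the mountain shape: too much trust means deferring on tasks the AI is bad at, and too little means ignoring it on tasks it is good at.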

How Do You Get to the Goldilocks Zone?

Most people don’t go into a situation with perfect knowledge of how much they should (or shouldn’t) trust automation. Many things contribute to how much someone will trust automation, such as age, previous experience with technology, self-confidence in the task at hand, and even some more arbitrary things like political belief! But regardless of where someone starts off in terms of trust, it’s always possible for them to update their understanding of the system, and thus how much trust they should have in it.

One of the largest drivers of this updating is experience with the automation. By using the automation, people can generally get a good understanding of what it is able to handle and where it has weaknesses, which allows them to calibrate their trust appropriately. However, caution is needed here: it’s not just a matter of time. You need to actually see the performance of the AI. This matters especially if you fall on the under-trust side of the curve. If you are “using” the AI but never give it the chance to make decisions, including the occasional error, then you will never be able to update your trust in the system.
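One simple way to picture this updating is as a running estimate of how often the AI is right, nudged by each outcome you actually observe. The sketch below is only an illustration under my own assumptions: the function name `update_trust`, the “prior” counts that stand in for someone’s starting trust, and the example numbers are all hypothetical.

```python
def update_trust(prior_successes, prior_failures, observations):
    """Estimate the AI's reliability from a starting belief plus observed outcomes.

    prior_successes / prior_failures: hypothetical counts encoding initial trust.
    observations: list of booleans, True when the AI's decision turned out right.
    """
    successes = prior_successes + sum(1 for seen in observations if seen)
    failures = prior_failures + sum(1 for seen in observations if not seen)
    return successes / (successes + failures)


# An under-truster who starts out believing the AI is right about 20% of the time.
never_lets_ai_decide = update_trust(1, 4, [])
watches_ai_decide = update_trust(1, 4, [True] * 18 + [False] * 2)

print(f"no observations:  {never_lets_ai_decide:.2f}")
print(f"20 observations:  {watches_ai_decide:.2f}")
```

With an empty list of observations the estimate stays exactly where it started, no matter how much time passes. Only outcomes that are actually seen, both successes and failures, move it toward the AI’s real performance.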